Are you interested in moving from survey-based (one-step) listening up the listening maturity ladder, and introducing dialogue-based (two-step) listening to drive actionability? Just let us know, and trust us: it is easier than you imagine.

Just bear in mind a few things:

- employees have long been ready to leave survey fatigue and lack of action behind

- managers are ready: they want to listen to employees, but the listening should be tailored and relevant to the business

- you can take any question from your previous platform with you and reuse it after some redesign, as we showed in part 1 of this blog; you can even keep the closed-ended part to continue collecting scores and compare results with previous years and across departments

- you can also choose CircleLytics Dialogue as an add-on to your current platform and bring our data into your platform to leverage insights and obtain higher-quality, actionable data.

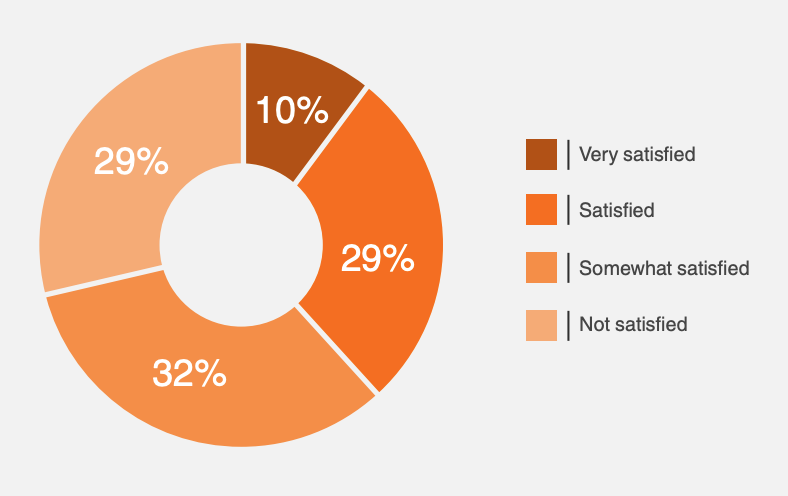

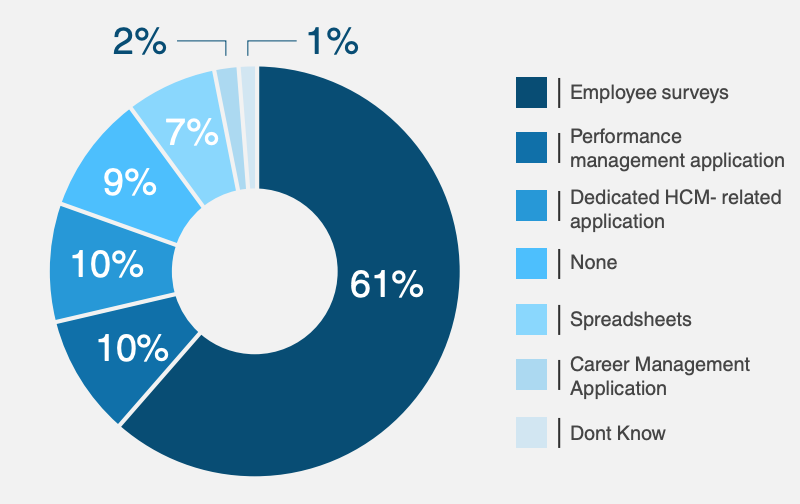

We share this Ventana Research finding, which shows a lack of convincing satisfaction with current technology, while surveys remain the dominant technology today.

Now, how about adding dialogue to complement and elevate surveys?

Let’s now take a few different questions from Qualtrics and explain how strategy 2) would work: adding dialogue on top of surveys, or running a separate, follow-up dialogue, for situations in which your company wants to keep surveys for some purposes.

Why would you do so, by the way? In addition to the reasons mentioned above, you might want a transformational phase: experimenting with new listening solutions without giving up the incumbent ones. You may also have rational reasons to compare notes, i.e. to compare the outcomes of surveys and CircleLytics Dialogue.

When you compare the two, you will learn the following about dialogue:

- response rates are usually higher and reached in less time, without pushing employees to fill in forms

- employees invest heavily in writing and explaining via textual answers and recommendations

- dialogue is consistently rated 4.3 out of 5 by more than 50,000 employees to date

- dialogue gets you to action 90% faster: simply compare the cycle times of surveys and dialogue

- ask managers: they are not burdened with post-survey meeting requirements, but instead turn recommendations from dialogues into results instantly, and their superiors will notice.

So, let’s examine some survey questions and see how you can build dialogue on top of them.

Two more Qualtrics questions are:

“What is our company doing well?”

“What should our company improve?”

Here are a few alternatives, if your survey platform allows you to edit these questions:

“What is our company doing well and why is this meaningful to you?”

“What is our company doing well and how does this impact you?”

“What could our company improve, in your view, and can you explain it to us in your own words?”

“What should our company improve, and why did you pick this?”

This way, you achieve two important goals of open-ended questions:

- make people think harder and thereby invest themselves more deeply

- make results more valuable by avoiding shortcut answers or one-word replies

Now it’s time for dialogue!

If your survey platform allows you to change the generic questions into, for example, our versions, you can simply export the open-text answers from the survey audience. Import them into the CircleLytics Dialogue platform, or use an API for a semi- or fully automated process. Start the dialogue. All respondents first receive your personalized message inviting them to the dialogue. They see others’ differing answers and vote them up or down. Our AI drives the distribution of hundreds or even many thousands of answers in varying sets of 15. People also add recommendations to enrich their up and down votes. This way, respondents learn to think even more deeply, and you increase their awareness of the challenges raised in some of their coworkers’ answers.

CircleLytics’ AI and natural language processing techniques first read all open answers, then compare and cluster them, after which unique, varied sets of 15 answers are produced and offered to each respondent. This increases respondents’ learning and engagement, and gets you their up/down votes as well as enrichments via recommendations, and hence the much-needed actionability.

Management and HR receive an instant, vetted-by-the-people result, contextualized by the intelligence of the crowd of employees. This is far more valuable and meaningful than the mere output of natural language processing. Only humans, by reading, interpreting, reflecting and voting up or down, can produce natural language understanding. After all, it is their value system and collective experience that give language its specific meaning. That’s the power of dialogue: understanding what people say and mean helps management decide what to do next.

What did we examine and learn in this last part?

- if, for whatever reason, you keep your surveys, we recommend considering adding an open-ended part to any closed-ended question

- this way, you get more relevant and valuable insights with which to run the instant dialogue as a follow-up

- with full respect for employees’ privacy, you collect contextualized, vetted results and leading recommendations via the two-step dialogue process

- instead of mere language processing, you use your results to gain language understanding and prioritized recommendations, and hence know which actions to take

- this two-step dialogue process proves highly engaging and is appreciated by employees and managers.

And now, finally, let’s see how to follow up your survey with dialogue as a separate step.

For this, let’s look at a Philips case, explained by one of its directors in his own words.

“At Philips, we conduct bi-annual employee engagement surveys. These are standard questions we ask Philips employees worldwide. This survey is intended to gauge the ‘temperature’, asking ourselves, ‘are we still on the right track?’ It does not yield any qualitative answers that drive my decision making today, because these are closed-ended questions that never vary. The textual answers remain unweighted: I don’t know what importance or sentiment others attribute to them, so I can’t derive reliable, decision-making value from it. We wouldn’t be able to make good comparisons with previous surveys if the questions were varying, so these global engagement surveys with generic questions make sense. But this also means that you cannot put forward specific topics to ask questions about for superior, faster decision-making. You will have to come up with another solution. The survey platform and surveys do not answer the ‘why’ and ‘how can we improve’ questions, to summarize it.”

He continues:

“In a word, the survey is good for its purpose but not for decision making purposes. At Philips, we have high standards and strong ambitions, also when it comes to taking action where necessary. I wanted to gather more qualitative feedback that I could use within my team. So, I took the initiative to use the CircleLytics Dialogue. The Employee Engagement global team supported my choice because the engagement survey is not used for qualitative deepening, let alone co-creation, to tackle and solve (local) challenges together.

I used CircleLytics to ask concrete questions from two perspectives:

- I wanted to dive deeper into some (of the many) topics from the global engagement survey where my region achieved insufficient or very high scores. I wanted to understand the why of it all and learn what decisions are crucial.

- I wanted to tackle some issues in my own management agenda. I used CircleLytics for co-creation sessions with my people to make them aware, involve them in these issues, understand the root causes, and create solutions.”

Read here about the complete case, to learn how to follow up your generic survey with two-step dialogues that dive deeper and engage people in vetting others’ answers, while learning from these differing perspectives.

We have now examined all scenarios:

1) replace engagement surveys with engaging dialogues and, if needed, keep surveys for focused research, including external benchmarking;

2) follow up your survey’s improved questions with an instant dialogue, and follow up your survey’s results with a dialogue on specific items, or even for specific groups.

What triggered your thinking most about our vision of people, listening and moving forward collectively? Which experiments are you open to? Reconstructing surveys, replacing them, adding dialogue, or other ideas?

Please contact us to exchange thoughts and analyse your company’s ambitions. We’re curious about your view of people and collective intelligence, and why you want to step up your listening game.